What is Apache Kafka? What do you need to know?

Imagine a busy marketplace with vendors constantly generating new goods (data). You need a reliable way to deliver these goods quickly and efficiently to customers (applications) who need them. That's where Apache Kafka comes in – it's the speedy delivery truck of the data world.

What is Apache Kafka?

Kafka is an open-source, distributed event streaming platform that acts as a central hub for data streams. It is a good fit for real-time applications such as social media feeds, fraud detection, and stock tickers, because it can take in and serve very large volumes of data with little delay.

How does Kafka work?

Here's a simplified breakdown of Kafka's architecture:

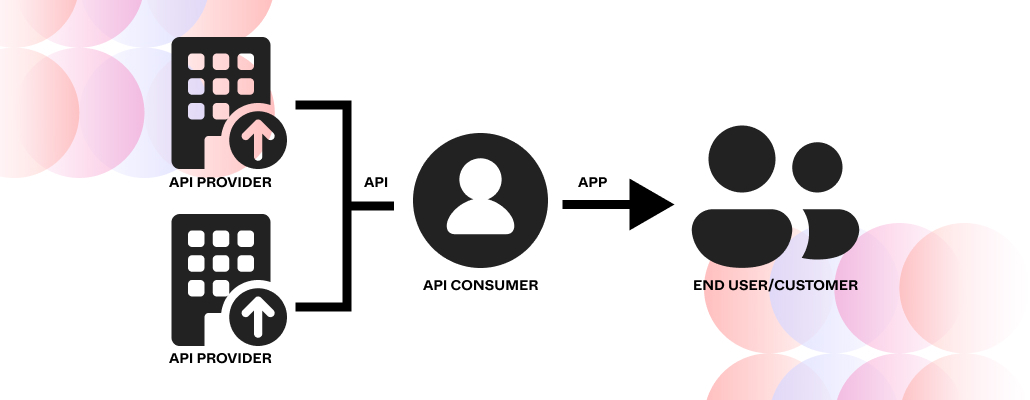

Producers

These are like the vendors in our marketplace, constantly generating new data (products). They send data to Kafka in the form of messages.
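As a rough illustration, a message can be pictured as a small record with an optional key, a value, and a timestamp, all encoded as bytes before being sent. The `Message` class and `serialize` helper below are hypothetical names used only for this sketch; they are not part of any Kafka client library, and JSON is just one possible encoding.

```python
import json
import time
from dataclasses import dataclass, field
from typing import Optional, Tuple


@dataclass
class Message:
    """Hypothetical stand-in for a Kafka record: topic, key, value, timestamp."""
    topic: str
    value: dict
    key: Optional[str] = None
    timestamp_ms: int = field(default_factory=lambda: int(time.time() * 1000))


def serialize(message: Message) -> Tuple[Optional[bytes], bytes]:
    """Encode the key and value as bytes, since brokers store raw bytes."""
    key_bytes = message.key.encode("utf-8") if message.key is not None else None
    value_bytes = json.dumps(message.value, sort_keys=True).encode("utf-8")
    return key_bytes, value_bytes


# A vendor (producer) preparing one message for the "user-activity" topic.
msg = Message(topic="user-activity", key="user-42", value={"action": "login"})
key_bytes, value_bytes = serialize(msg)
print(msg.topic, key_bytes, value_bytes)
```

The key is optional here, but when it is present it can be used to keep related messages together, for example all events for one user.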

Topics

Think of these as different delivery routes. Each topic holds messages related to a specific category or subject. For example, you might have a topic for "user activity," another for "product updates," and so on.

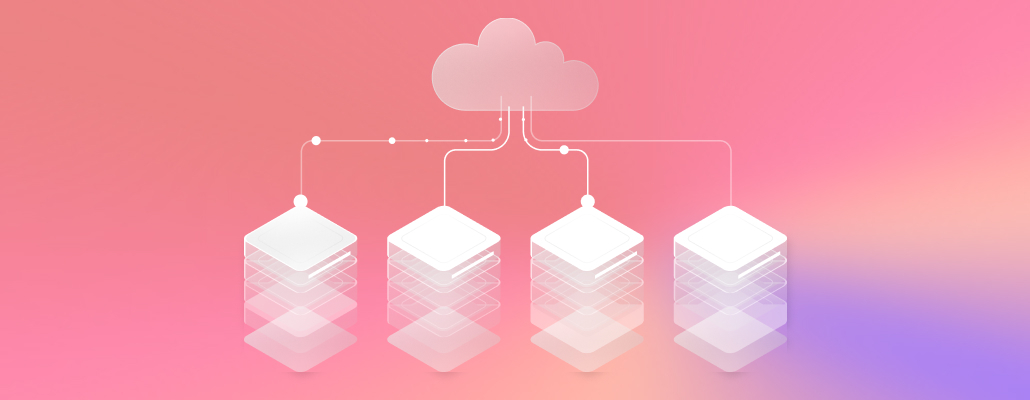

Brokers

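These are the hardworking delivery trucks that handle the actual transportation of messages. In practice, each broker is a server in the Kafka cluster. Every topic is split into partitions, which are like individual compartments within the topic, and brokers store those partitions and serve them to consumers.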
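To make partitions more concrete, here is a minimal sketch of how a message key could be mapped to a partition so that messages with the same key always land in the same compartment, in order. The `choose_partition` function is a hypothetical helper, and CRC32 is only a stand-in hash for this example; real Kafka clients use their own partitioning logic.

```python
import zlib
from typing import List, Tuple


def choose_partition(key: bytes, num_partitions: int) -> int:
    """Map a key to a partition index (CRC32 is an assumption for this sketch)."""
    return zlib.crc32(key) % num_partitions


NUM_PARTITIONS = 3
# Each partition is modeled as an append-only list of (key, value) pairs.
partitions: List[List[Tuple[bytes, str]]] = [[] for _ in range(NUM_PARTITIONS)]

events = [
    (b"user-1", "login"),
    (b"user-2", "login"),
    (b"user-1", "add-to-cart"),
    (b"user-1", "checkout"),
]

for key, value in events:
    partitions[choose_partition(key, NUM_PARTITIONS)].append((key, value))

for index, log in enumerate(partitions):
    print(index, log)
```

Because the hash of a key never changes, all `user-1` events end up in one partition and keep their original order there.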

Consumers

These are your customers waiting for specific data. They subscribe to the topics they're interested in and pull new messages from the brokers as they become available.
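Kafka consumers fetch data by polling rather than having it pushed to them. The sketch below imitates that loop against an in-memory list; `PartitionLog` and its methods are hypothetical stand-ins for one partition on a broker, not a real client API.

```python
from typing import List


class PartitionLog:
    """Hypothetical in-memory stand-in for one partition: an append-only list."""

    def __init__(self) -> None:
        self._records: List[str] = []

    def append(self, record: str) -> int:
        """Store a record and return its position in the log."""
        self._records.append(record)
        return len(self._records) - 1

    def fetch(self, start: int, max_records: int) -> List[str]:
        """Return up to max_records records beginning at position start."""
        return self._records[start:start + max_records]


log = PartitionLog()
for i in range(5):
    log.append(f"event-{i}")

position = 0          # where this consumer will read next
received: List[str] = []
while True:
    batch = log.fetch(position, max_records=2)
    if not batch:
        break         # a real consumer would keep polling for new data
    received.extend(batch)
    position += len(batch)

print(received, position)
```

Pulling in batches lets each consumer read at its own pace instead of being overwhelmed by a fast producer.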

Important things to know about Kafka

Scalability

Kafka can handle mountains of data, making it ideal for large-scale applications. Because topics are split into partitions, you can add more brokers and spread the partitions across them to distribute the workload as your system grows.

High Performance

Kafka is fast. It appends messages to disk sequentially and moves them in batches, which lets it deliver messages with very low latency and support near-real-time data processing.

Durability

Don't worry about losing your precious data. Kafka can replicate each partition across several brokers (the number of copies is set by the topic's replication factor), so messages stay available even if a broker fails.
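The idea of keeping several copies can be sketched with a simplified round-robin placement. The `assign_replicas` and `available_partitions` functions are hypothetical helpers for illustration; Kafka's actual replica placement and failover logic are more involved than this.

```python
from typing import Dict, List, Set


def assign_replicas(num_partitions: int, brokers: List[str],
                    replication_factor: int) -> Dict[int, List[str]]:
    """Place each partition's copies on consecutive brokers (simplified)."""
    if replication_factor > len(brokers):
        raise ValueError("replication factor cannot exceed the number of brokers")
    return {
        partition: [brokers[(partition + i) % len(brokers)]
                    for i in range(replication_factor)]
        for partition in range(num_partitions)
    }


def available_partitions(assignment: Dict[int, List[str]],
                         failed: Set[str]) -> List[int]:
    """A partition survives if at least one broker holding a copy is still up."""
    return [p for p, replicas in assignment.items()
            if any(broker not in failed for broker in replicas)]


brokers = ["broker-1", "broker-2", "broker-3"]
assignment = assign_replicas(num_partitions=3, brokers=brokers, replication_factor=2)
print(assignment)
print(available_partitions(assignment, failed={"broker-1"}))
print(available_partitions(assignment, failed={"broker-1", "broker-2"}))
```

With two copies of every partition, losing one broker leaves all partitions readable, while losing two brokers at once can make a partition unavailable.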

Flexibility

Kafka is like a Swiss Army knife for data streaming. It can work with various data types, programming languages, and integration tools, making it adaptable to your specific needs.

Kafka Consumers

Consumers are essential players in the Kafka ecosystem. They're responsible for fetching and processing messages from topics they're subscribed to. Here are some important points to know about consumers:

Consumer Groups

Think of these as groups of customers with similar interests. Consumers can join a group to share the workload of processing messages from a topic. Within a group, each partition is read by only one consumer at a time, which spreads the work across the members and prevents overloading any single consumer.
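One way to picture how a group shares the work is a round-robin split of partitions among its members. The `assign_partitions` function below is a hypothetical helper that shows only one possible strategy; it is a sketch, not Kafka's group coordination protocol.

```python
from typing import Dict, List


def assign_partitions(partitions: List[int],
                      consumers: List[str]) -> Dict[str, List[int]]:
    """Deal partitions out to consumers one at a time, like dealing cards."""
    if not consumers:
        raise ValueError("a consumer group needs at least one member")
    members = sorted(consumers)
    assignment: Dict[str, List[int]] = {member: [] for member in members}
    for index, partition in enumerate(sorted(partitions)):
        assignment[members[index % len(members)]].append(partition)
    return assignment


# Four partitions shared by two consumers: each gets two.
print(assign_partitions([0, 1, 2, 3], ["consumer-a", "consumer-b"]))

# More consumers than partitions: the extra consumer sits idle.
print(assign_partitions([0, 1], ["consumer-a", "consumer-b", "consumer-c"]))
```

The second call shows a practical limit: adding consumers beyond the number of partitions does not add any more parallelism.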

Offsets

An offset is the position of a message within a partition. Offsets work like bookmarks that keep track of which messages a consumer has already processed, so a consumer can resume from where it left off after a restart or failure instead of missing data.

Committing Offsets

Once a consumer has processed a message, it "commits" the offset, acknowledging that the message has been handled. If that consumer stops, another consumer in the group can pick up from the last committed offset.
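The bookmark idea can be sketched with a dictionary that remembers, per group and partition, the next position to read. The names `records`, `committed`, and `run_consumer` are hypothetical and exist only for this example; a real consumer commits offsets through its client library.

```python
from typing import Dict, List, Tuple

# One partition's log and a store of committed offsets (both in memory).
records: List[str] = [f"order-{i}" for i in range(6)]
committed: Dict[Tuple[str, int], int] = {}  # (group, partition) -> next offset to read


def run_consumer(group: str, partition: int, max_to_process: int) -> List[str]:
    """Process up to max_to_process records, committing after each one."""
    position = committed.get((group, partition), 0)
    processed: List[str] = []
    while position < len(records) and len(processed) < max_to_process:
        processed.append(records[position])        # "process" the record
        position += 1
        committed[(group, partition)] = position   # bookmark the next record
    return processed


first = run_consumer("billing", 0, max_to_process=4)    # this consumer stops early
second = run_consumer("billing", 0, max_to_process=10)  # a replacement resumes
print(first)
print(second)
```

Committing only after a record has been processed means a crash between the two steps leads to that record being processed again, which is why this pattern is usually described as at-least-once.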

Kafka 3

The Kafka 3.x releases build on several features that are worth knowing about:

Improved Security

Kafka supports TLS encryption, SASL-based authentication, and ACL-based authorization, which helps protect sensitive data.

Stream Processing Connectors

Connectors make it easier to integrate Kafka with popular stream processing frameworks like Apache Flink and Apache Spark, both of which provide their own Kafka integrations.

Exactly-Once Semantics

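When idempotent producers and transactions are used, Kafka can ensure that each message is written and processed exactly once within Kafka, even in the face of failures or retries. This capability predates Kafka 3: it was introduced in version 0.11.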
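One building block behind this guarantee is the idempotent write: the broker remembers the last sequence number it accepted from each producer and ignores a retry it has already stored. The `DedupLog` class below is a hypothetical, heavily simplified sketch of that idea; it does not cover transactions or the consumer side.

```python
from typing import Dict, List


class DedupLog:
    """Hypothetical log that drops retried writes it has already stored."""

    def __init__(self) -> None:
        self.records: List[str] = []
        self._last_sequence: Dict[str, int] = {}  # producer id -> last accepted sequence

    def append(self, producer_id: str, sequence: int, value: str) -> bool:
        """Store the value unless this producer already sent this sequence number."""
        if sequence <= self._last_sequence.get(producer_id, -1):
            return False  # duplicate caused by a retry, so it is ignored
        self.records.append(value)
        self._last_sequence[producer_id] = sequence
        return True


log = DedupLog()
log.append("producer-1", 0, "payment-100")
log.append("producer-1", 1, "payment-101")

# The acknowledgement for sequence 1 was lost, so the producer retries it.
retried = log.append("producer-1", 1, "payment-101")
print(retried, log.records)
```

Without the sequence check, the retry would store `payment-101` twice, which is exactly the kind of duplicate that exactly-once semantics is meant to prevent.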

The Power of Kafka

Kafka is used by some of the biggest companies in the world for a variety of purposes:

Netflix

Uses Kafka in its data pipelines to collect and process large volumes of events in real time with high performance and reliability.

LinkedIn

Handles real-time updates and notifications for its massive user base.

Uber

Tracks ride requests and driver locations in real time, ensuring a seamless user experience.

Amazon

Powers various data pipelines for its e-commerce platform, enabling efficient order processing and delivery.

Conclusion

Kafka is worth exploring whether you are a data engineer or just curious about real-time data streaming. It can help you build applications that are scalable, efficient, and secure, and that stand the test of time in a data-driven world. Revolutionise your data journey with Kafka. Visit the Tangent blog for more.

FAQs

1. What is Kafka used for?

Kafka shines in real-time data streaming scenarios. Companies like Netflix use it for real-time event pipelines, LinkedIn for notifications, Uber for tracking rides, and Amazon for order processing. It excels at handling large data volumes quickly and reliably.

2. How does Kafka work?

Think of it like a delivery system for data. Producers send data (messages) to specific topics (categories). Brokers (delivery trucks) store messages in partitions (compartments). Consumers (customers) subscribe to topics and receive messages as they arrive. It's fast, scalable, and secure.

3. What are the benefits of using Kafka?

Scalability: Handle mountains of data by adding more "delivery trucks" (brokers).

High Performance: Deliver messages super fast for near-real-time processing.

Durability: Don't worry about losing data – it can be replicated across multiple brokers.

Flexibility: Works with various data types, languages, and tools, adapting to your needs.